Compressed sensing (or compressive sensing) is a relatively new technique that is becoming more and more important in image and signal acquisition. The term originates from image compression, where, in the classical workflow, images are first recorded normally, and then compressed. The idea to perform compressed sensing stems from the way in which modern image compression algorithms work. They transform the image into a domain in which the image is sparse, which means that they create a representation of the image with only a limited number of nonzero coefficients. The compression is then achieved simply by only storing the largest coefficients. Compressed sensing bypasses this compression step.

Stars!

The goal for this article is to introduce compressed sensing in the simplest possible way. I’ll therefore use an example for which a transform into a domain where the image is sparse is not necessary, namely stars. In the example of Figure 1 (left image), it is clear that only a small number of pixels is nonzero. In a future article, I’ll introduce an example with a proper wavelet transform and sampling with, e.g., noiselets, instead of this simplified approach. The image is 50×50 pixels (magnified four times for clarity). It contains 50 stars with four different intensities, where each star is a single pixel. It is clear that, in the classical sampling approach such as your DSLR camera uses, each pixel must be sampled to detect all the stars. Skip one and you can’t tell whether there is a star there or not. How can we get around this seemingly hard limit?

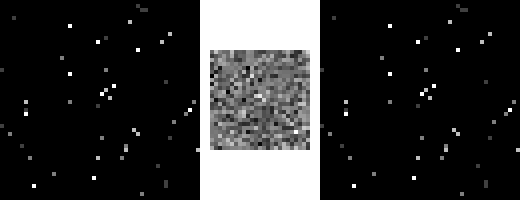

Figure 1. Compressed sensing example with stars. Original image (left), measurements (middle), reconstructed image (right).

Figure 1. Compressed sensing example with stars. Original image (left), measurements (middle), reconstructed image (right).The basic idea that makes compressed sensing work, is to acquire measurements that combine the values of many pixels in a single number. In this simple example, I’ve created measurements by randomly flipping the sign of the image pixels and summing them. Of course, you then have to remember exactly how you’ve made each measurement, and then reconstruct the image from that. The model for this sampling operation is a system of linear equations,

\[{\bf Ax}={\bf b},\]

where \({\bf x}\) is the image, formatted as a one-dimensional vector. Since this is a matrix product, each row of the matrix \({\bf A}\) represents a single measurement. In my example, each row consists of ones and minus ones, indicating exactly how pixels are summed together. The vector \({\bf b}\) contains the measurements (625 elements in this example, which is 25% of the total number of pixels). The measurements are shown in the middle image (also magnified four times for clarity), formatted as a square to make it fit on the page and to demonstrate visually that the number of measurements is only one fourth of the number of pixels.

Reconstruction

Given the recorded measurements \({\bf b}\), finding the original image again is then a minimization problem. For this example, I’ve used

\[{\bf\hat{x}}=\underset{{\bf x}}{\operatorname{arg\,min}}\,\|{\bf Ax}-{\bf b}\|^2+\lambda\|{\bf x}\|_1\]

The arg min operator returns the image \({\bf x}\) that minimizes the expression. The formula consists of two parts. The first part, \(\|{\bf Ax}-{\bf b}\|^2\) makes sure that the solution is consistent with the data, and is a simple least squares term. The second part, \(\lambda\|{\bf x}\|_1\), is where the magic happens. This term minimizes the sparsity of the solution, by selecting the solution with the smallest sum of absolute values, through minimizing the \(l_1\) (or Manhattan) norm, given by

\[\|{\bf x}\|_1\equiv\sum_{i=1}^{n} |x_i|.\]

The parameter \(\lambda\) provides a trade-off between the least squares and the sparsity parts of the expression.

ISTA

You can minimize a function like this in many different ways. For this example, I’ve used the ISTA algorithm (Iterative Shrinkage-Thresholding Algorithm). I’ll briefly show how the ISTA algorithm works, but the full background on why it works would lead me too far, so I won’t go into details.

The algorithm starts with a zero image, \({\bf x}^{(0)}={\bf 0}\), and then iteratively performs the update step given by

\[{\bf x}^{(t+1)}=\mathcal{T}_{\lambda s}(G({\bf x}^{(t)})),\]

where the least squares minimization is done by

\[G({\bf x}^{(t)})\equiv{\bf x}^{(t)}-2s{\bf A}^\mathrm{T}({\bf Ax}^{(t)}-{\bf b}),\]

where \({\bf s}\) is a fixed step size. Sparsity is then encouraged by \(\mathcal{T}_\alpha:\mathbb{R}^n\to\mathbb{R}^n\), the shrinkage operator, which is defined as

\[\mathcal{T}_\alpha({\bf x})_i\equiv\max\{0,|x_i|-\alpha\}\operatorname{sgn}(x_i).\]

These formulas may look a bit complicated, but a straightforward implementation in MATLAB or Python is really only a few lines of code. The final result is that a number of measurements that is only 25% of the number of pixels is enough to reconstruct the image almost perfectly (see the right image in Figure 1).

Add new comment